Institute Output

What Ultimately Is There? Metaphysics and the Ruliad

Stephen Wolfram

“What ultimately is there?” has always been seen as a fundamental—if thorny—question for philosophy, or perhaps theology. But despite a couple of millennia of discussion, I think it’s fair to say that only modest progress has been made with it. But maybe, just maybe, this is the moment where that’s going to change—and on the basis of surprising new ideas and new results from our latest efforts in science, it’s finally going to be possible to make real progress, and in the end to build what amounts to a formal, scientific approach to metaphysics.

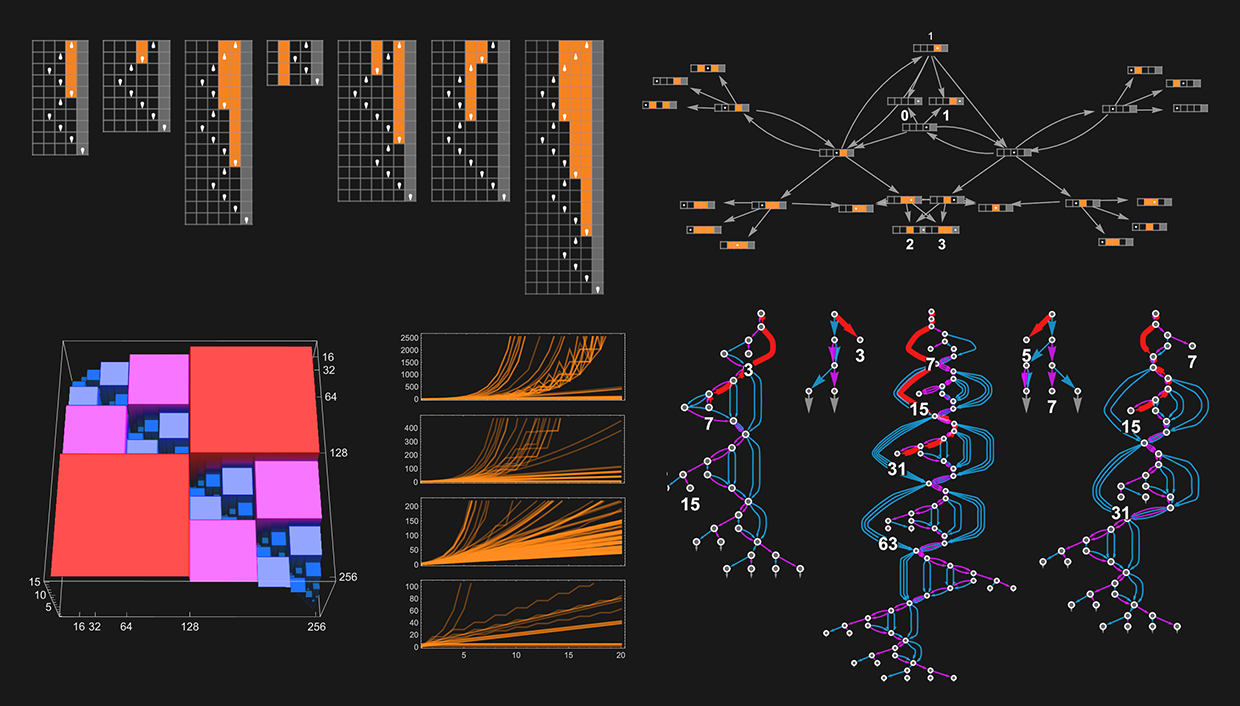

P vs. NP and the Difficulty of Computation: A Ruliological Approach

Stephen Wolfram

“Could there be a faster program for that?” It’s a fundamental type of question in theoretical computer science. But except in special cases, such a question has proved fiendishly difficult to answer. And, for example, in half a century, almost no progress has been made even on the rather coarse (though very famous) P vs. NP question—essentially the question of whether, for any nondeterministic program that runs in polynomial time, there will always be a deterministic one that also runs in polynomial time. From a purely theoretical point of view, it’s never been very clear how even to start addressing such a question. But what if one were to look at the question empirically, say in effect just by enumerating possible programs and explicitly seeing how fast they are, etc.?
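As a minimal sketch of what "just enumerating possible programs" can look like, the Python below enumerates every 2-state, 2-color Turing machine, runs each one from a blank tape with a step cap, and tallies how long the halting ones take. The conventions here are assumptions chosen for illustration, not necessarily the setup used in the article: each rule writes a color, moves left or right, and goes to state 0, state 1, or a separate halt state (`HALT` is a hypothetical marker), and the halting transition counts as a step.

```python
from collections import Counter
from itertools import product

HALT = -1  # assumed convention: a distinguished "halt" next-state

def run(rules, max_steps):
    """Run a machine from state 0 on an all-0 tape.

    Returns the number of steps taken to halt (the halting transition
    counts as a step), or None if it has not halted after max_steps.
    """
    tape = {}  # sparse tape: position -> color; missing cells read as 0
    pos, state = 0, 0
    for step in range(1, max_steps + 1):
        write, move, nxt = rules[(state, tape.get(pos, 0))]
        tape[pos] = write
        pos += move
        if nxt == HALT:
            return step
        state = nxt
    return None

def enumerate_machines(states=2, colors=2):
    """Yield every rule table: (state, color) -> (write, move, next state)."""
    keys = [(s, c) for s in range(states) for c in range(colors)]
    next_states = list(range(states)) + [HALT]
    actions = list(product(range(colors), (-1, 1), next_states))
    for choice in product(actions, repeat=len(keys)):
        yield dict(zip(keys, choice))

def halting_time_distribution(states=2, colors=2, max_steps=50):
    """Count machines by halting time; the key None collects machines
    that had not halted within max_steps."""
    counts = Counter()
    for rules in enumerate_machines(states, colors):
        counts[run(rules, max_steps)] += 1
    return counts

if __name__ == "__main__":
    dist = halting_time_distribution()
    print("machines:", sum(dist.values()))
    for t in sorted(k for k in dist if k is not None):
        print(f"halt after {t} steps: {dist[t]} machines")
    print("not halted within cap:", dist[None])
```

With 2 states and 2 colors there are 12^4 = 20736 rule tables, and the longest halting time found should agree with the known 2-state, 2-color busy beaver value of 6 steps. The step cap turns "never halts" into "has not halted yet", which is only safe here because that maximum is already known; for larger machines the cap becomes a real source of uncertainty.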

What Is Ruliology?

Stephen Wolfram

Ruliology is taking off! And more and more people are talking about it. But what is ruliology? Since I invented the term, I decided I should write something to explain it. But then I realized: I actually already wrote something back in 2021 when I first invented the term. What I wrote back then was part of something longer. But here now is the part that explains ruliology.

What’s Special about Life? Bulk Orchestration and the Rulial Ensemble in Biology and Beyond

Stephen Wolfram

It’s a key feature of living systems, perhaps even in some ways the key feature: that even right down to a molecular scale, things are orchestrated. Molecules (or at least large ones) don’t just move around randomly, like in a liquid or a gel. Instead, what molecular biology has discovered is that there are endless active mechanisms that in effect orchestrate what even individual molecules in living systems do. But what is the result of all that orchestration? And could there perhaps be a general characterization of what happens in systems that exhibit such “bulk orchestration”?

The Ruliology of Lambdas

Stephen Wolfram

It’s a story of pure, abstract computation. In fact, historically, one of the very first. But even though it’s something I for one have used in practice for nearly half a century, it’s not something that in all my years of exploring simple computational systems and ruliology I’ve ever specifically studied. And, yes, it involves some fiddly technical details. But it’ll turn out that lambdas—like so many systems I’ve explored—have a rich ruliology, made particularly significant by their connection to practical computing.

“I Have a Theory Too”: The Challenge and Opportunity of Avocational Science

Stephen Wolfram

Most physicists term people who send them their own theories of physics “crackpots”, and either discard their missives or send back derisive responses. I’ve never felt like that was the right thing to do. Somehow I’ve always felt as if there has to be a way to channel that interest and effort into something that would be constructive and fulfilling for all concerned. And maybe, just maybe, I now have at least one idea in that direction.

What If We Had Bigger Brains? Imagining Minds beyond Ours

Stephen Wolfram

We humans have perhaps 100 billion neurons in our brains. But what if we had many more? Or what if the AIs we built effectively had many more? What kinds of things might then become possible? At 100 billion neurons, we know, for example, that compositional language of the kind we humans use is possible. At the 100 million or so neurons of a cat, it doesn’t seem to be. But what would become possible with 100 trillion neurons? And is it even something we could imagine understanding?

What Can We Learn about Engineering and Innovation from Half a Century of the Game of Life Cellular Automaton?

Stephen Wolfram

Things are invented. Things are discovered. And somehow there’s an arc of progress that’s formed. But are there what amount to “laws of innovation” that govern that arc of progress?

There are some exponential and other laws that purport to at least measure overall quantitative aspects of progress (number of transistors on a chip; number of papers published in a year; etc.). But what about all the disparate innovations that make up the arc of progress? Do we have a systematic way to study those?

Towards a Computational Formalization for Foundations of Medicine

Stephen Wolfram

As it’s practiced today, medicine is almost always about particulars: “this has gone wrong; this is how to fix it”. But might it also be possible to talk about medicine in a more general, more abstract way—and perhaps to create a framework in which one can study its essential features without engaging with all of its details?

Who Can Understand the Proof? A Window on Formalized Mathematics

Stephen Wolfram

For more than a century people had wondered how simple the axioms of logic (Boolean algebra) could be. On January 29, 2000, I found the answer—and made the surprising discovery that they could be about twice as simple as anyone knew. (I also showed that what I found was the simplest possible.)
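The simplest axiom referred to here is usually quoted as the single identity ((a·b)·c)·(a·((a·c)·a)) = c, where · is Nand. The sketch below does only the easy half of the story: it checks by truth table that this identity holds when · is read as Nand on {0, 1}. That shows the axiom is sound for Boolean algebra; showing that it is complete (that all of Boolean algebra follows from it), and that nothing simpler works, requires actual proofs, which a truth table cannot supply.

```python
from itertools import product

def nand(x, y):
    """Nand on the Boolean values 0 and 1."""
    return 1 - (x & y)

def axiom_lhs(a, b, c):
    # ((a . b) . c) . (a . ((a . c) . a)), with "." read as Nand
    return nand(nand(nand(a, b), c), nand(a, nand(nand(a, c), a)))

def holds_for_nand():
    """True if axiom_lhs(a, b, c) == c for all 8 Boolean assignments."""
    return all(axiom_lhs(a, b, c) == c for a, b, c in product((0, 1), repeat=3))

if __name__ == "__main__":
    print(holds_for_nand())
```

Running it prints `True`: all eight assignments satisfy the identity.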

On the Nature of Time

Stephen Wolfram

Time is a central feature of human experience. But what actually is it? In traditional scientific accounts it’s often represented as some kind of coordinate much like space (though a coordinate that for some reason is always systematically increasing for us). But while this may be a useful mathematical description, it’s not telling us anything about what time in a sense “intrinsically is”.

Foundations of Biological Evolution: More Results & More Surprises

Stephen Wolfram

A few months ago I introduced an extremely simple “adaptive cellular automaton” model that seems to do remarkably well at capturing the essence of what’s happening in biological evolution. But over the past few months I’ve come to realize that the model is actually even richer and deeper than I’d imagined. And here I’m going to describe some of what I’ve now figured out about the model—and about the often-surprising things it implies for the foundations of biological evolution.

Nestedly Recursive Functions

Stephen Wolfram

Integers. Addition. Subtraction. Maybe multiplication. Surely that’s not enough to be able to generate any serious complexity. In the early 1980s I had made the very surprising discovery that very simple programs based on cellular automata could generate great complexity. But how widespread was this phenomenon?
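A well-known illustration of the phenomenon described here (not necessarily one of the specific functions studied in the article) is Hofstadter's Q sequence: Q(1) = Q(2) = 1 and Q(n) = Q(n - Q(n-1)) + Q(n - Q(n-2)). It uses nothing but integers, addition, and subtraction, yet because the arguments of the recursion depend on earlier values, the resulting sequence behaves erratically. A minimal Python sketch:

```python
def hofstadter_q(n_terms):
    """Return the first n_terms values Q(1), ..., Q(n_terms) of Hofstadter's Q sequence."""
    q = [0, 1, 1]  # q[0] is unused padding so that q[n] holds Q(n)
    for n in range(3, n_terms + 1):
        # Nested recursion: which earlier terms get added depends on earlier terms.
        q.append(q[n - q[n - 1]] + q[n - q[n - 2]])
    return q[1:n_terms + 1]

if __name__ == "__main__":
    print(hofstadter_q(20))
```

The first 20 terms are 1, 1, 2, 3, 3, 4, 5, 5, 6, 6, 6, 8, 8, 8, 10, 9, 10, 11, 11, 12; the drop from 10 to 9 is an early sign that the sequence is not simply increasing.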

What’s Really Going On in Machine Learning? Some Minimal Models

Stephen Wolfram

It’s surprising how little is known about the foundations of machine learning. Yes, from an engineering point of view, an immense amount has been figured out about how to build neural nets that do all kinds of impressive and sometimes almost magical things. But at a fundamental level we still don’t really know why neural nets “work”—and we don’t have any kind of “scientific big picture” of what’s going on inside them.

Ruliology of the “Forgotten” Code 10

Stephen Wolfram

For several years I’d been studying the question of “where complexity comes from”, for example in nature. I’d realized there was something very computational about it (and that had even led me to the concept of computational irreducibility—a term I coined just a few days before June 1, 1984). But somehow I had imagined that “true complexity” must come from something already complex or at least random. Yet here in this picture, plain as anything, complexity was just being “created”, basically from nothing. And all it took was following a very simple rule, starting from a single black cell.

Why Does Biological Evolution Work? A Minimal Model for Biological Evolution and Other Adaptive Processes

Stephen Wolfram

Why does biological evolution work? And, for that matter, why does machine learning work? Both are examples of adaptive processes that surprise us with what they manage to achieve. So what’s the essence of what’s going on? I’m going to concentrate here on biological evolution, though much of what I’ll discuss is also relevant to machine learning—but I’ll plan to explore that in more detail elsewhere.

Can AI Solve Science?

Stephen Wolfram

Particularly given its recent surprise successes, there’s a somewhat widespread belief that eventually AI will be able to “do everything”, or at least everything we currently do. So what about science? Over the centuries we humans have made incremental progress, gradually building up what’s now essentially the single largest intellectual edifice of our civilization. But despite all our efforts, there are still all sorts of scientific questions that remain. So can AI now come in and just solve all of them?

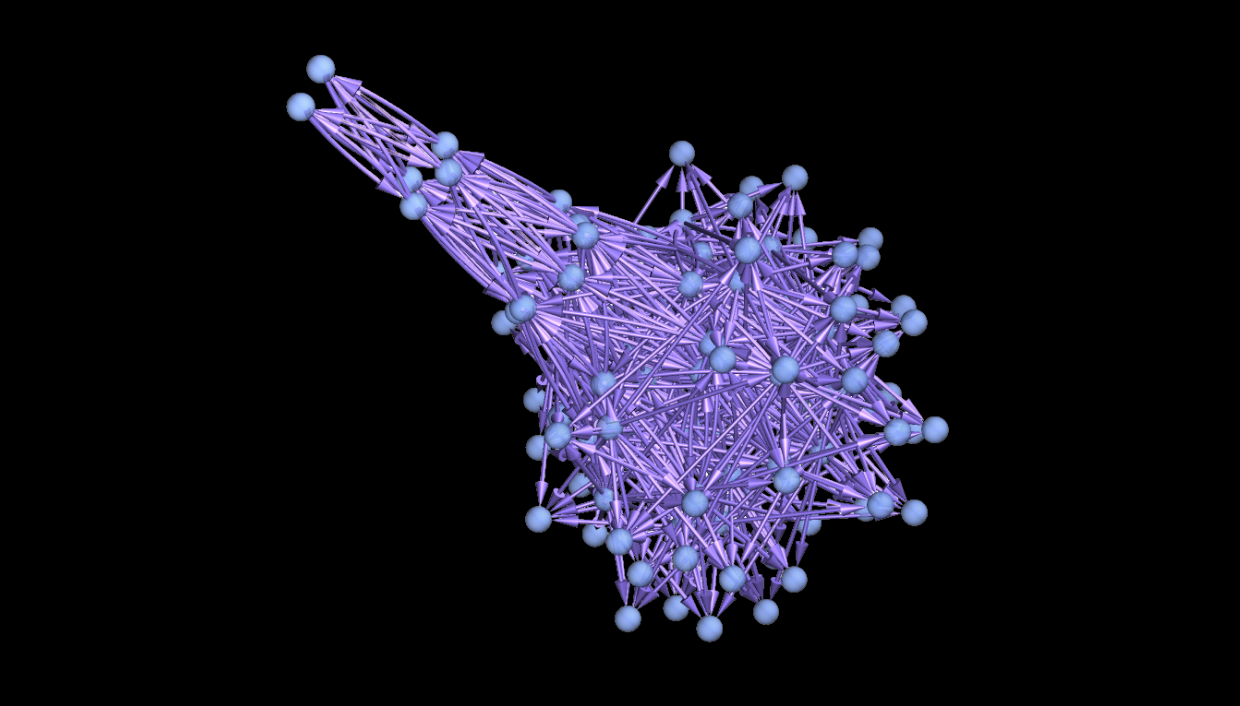

Observer Theory

Stephen Wolfram

We call it perception. We call it measurement. We call it analysis. But in the end it’s about how we take the world as it is, and derive from it the impression of it that we have in our minds.

Aggregation and Tiling as Multicomputational Processes

Stephen Wolfram

Multiway systems have a central role in our Physics Project, particularly in connection with quantum mechanics. But what’s now emerging is that multiway systems in fact serve as a quite general foundation for a whole new “multicomputational” paradigm for modeling.
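To make "multiway system" concrete, here is a minimal Python sketch of a string multiway system: at each step every rule is applied at every position where it matches, and all the resulting strings are kept as separate branches rather than one being chosen. The rules {A → AB, B → A} are an illustrative choice, not taken from the article, and the sketch records only the set of distinct states at each step, not the full graph of which state led to which.

```python
def successors(state, rules):
    """All strings reachable from `state` by one rewrite at one position."""
    out = set()
    for lhs, rhs in rules:
        start = state.find(lhs)
        while start != -1:
            out.add(state[:start] + rhs + state[start + len(lhs):])
            start = state.find(lhs, start + 1)
    return out

def multiway_generations(initial, rules, steps):
    """List of sets: the distinct states present after 0, 1, ..., steps rewrites."""
    generations = [{initial}]
    for _ in range(steps):
        nxt = set()
        for state in generations[-1]:
            nxt |= successors(state, rules)  # keep every branch, merging duplicates
        generations.append(nxt)
    return generations

if __name__ == "__main__":
    rules = [("A", "AB"), ("B", "A")]
    for i, gen in enumerate(multiway_generations("A", rules, 4)):
        print(i, sorted(gen))
```

Starting from "A", the states after two steps are {ABB, AA}, and after three steps {ABBB, AAB, ABA}; note that ABA and AAB are each reached along two different paths, which is where branches merge.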

Expression Evaluation and Fundamental Physics

Stephen Wolfram

It is shown that the way the Wolfram Language rewrites and evaluates expressions mirrors the universe’s own evolution: both proceed through discrete events linked by causal relationships, form “spacetime-like” structures, and branch into multiway histories analogous to quantum superpositions.